
Understanding site crawling and how search engines crawl and index websites can be a confusing topic. Every search engine does it a little differently, but the general concepts are the same. Here is a quick rundown of what you should know about how search engines crawl your site. For that, there is no better service provider than Desire India Today.

So what is site crawling?

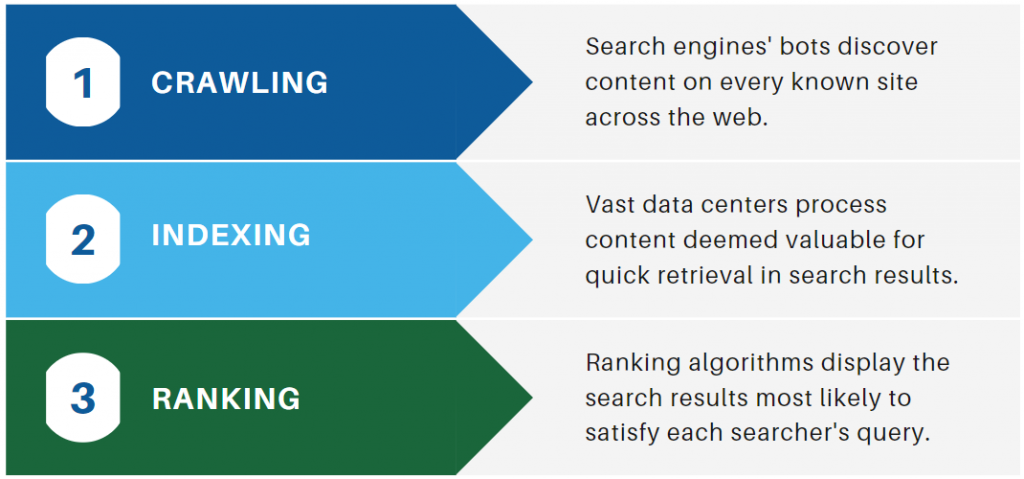

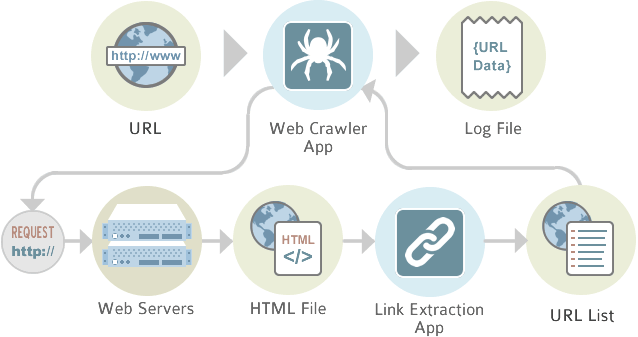

Site crawling is the automated fetching of web pages by a software process, the purpose of which is to index the content of websites so they can be searched. The crawler analyzes the content of each page, looking for links to the next pages to fetch and index.
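The link-discovery step described above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not how any particular search engine works: the `LinkExtractor` class, the `extract_links` helper, and the sample page are hypothetical names and data chosen for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag, resolved against the page URL."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links and drop any #fragment.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                if absolute not in self.links:
                    self.links.append(absolute)


def extract_links(base_url, html):
    """Return the de-duplicated absolute URLs linked from one page."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content, used only for illustration.
sample_html = """
<html><body>
  <a href="/about">About</a>
  <a href="posts/first.html#comments">First post</a>
  <a href="https://example.org/">Elsewhere</a>
</body></html>
"""

links = extract_links("https://example.com/blog/", sample_html)
print(links)
```

A real crawler would add each discovered URL to a queue, fetch it, and repeat, while keeping track of which pages it has already visited.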

Are there different kinds of crawlers?

There certainly are different kinds of crawlers. But perhaps the most important question is, "What is a crawler?"

Different search engines and technologies have different methods of getting a site's content with crawlers:

- Full crawls take a snapshot of a site at a specific point in time, and then periodically recrawl the entire site. This is typically considered a "brute force" approach, as the crawler tries to recrawl the entire site each time. It is very inefficient, because most pages will not have changed between crawls. It does, however, allow the search engine to keep an up-to-date copy of pages, so if the content of a particular page changes, those changes will eventually become searchable.

- Single-page crawls allow you to crawl or recrawl only new or updated content. There are many ways to find new or updated content. These can include sitemaps, RSS feeds, syndication and ping services, or crawling algorithms that can detect new content without crawling the entire site.
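As one illustration of finding updated content without recrawling everything, the sketch below reads a sitemap and keeps only the URLs whose `<lastmod>` date falls on or after the previous crawl. It assumes the site publishes a sitemap in the standard sitemaps.org XML format. The function name `urls_changed_since` and the sample data are hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace used by sitemaps that follow the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_changed_since(sitemap_xml, last_crawl):
    """Return URLs whose <lastmod> is on or after last_crawl.

    Entries without <lastmod> are included, because there is no way
    to tell from the sitemap whether they changed.
    """
    root = ET.fromstring(sitemap_xml)
    changed = []
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", namespaces=SITEMAP_NS)
        lastmod = url.findtext("sm:lastmod", namespaces=SITEMAP_NS)
        if loc is None:
            continue
        # lastmod may be a plain date or a full timestamp; compare the date part.
        if lastmod is None or date.fromisoformat(lastmod.strip()[:10]) >= last_crawl:
            changed.append(loc.strip())
    return changed


# Hypothetical sitemap, used only for illustration.
sample_sitemap = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc><lastmod>2024-01-05</lastmod></url>
  <url><loc>https://example.com/new-post</loc><lastmod>2024-03-10T08:30:00+00:00</lastmod></url>
  <url><loc>https://example.com/undated</loc></url>
</urlset>
"""

to_recrawl = urls_changed_since(sample_sitemap, date(2024, 2, 1))
print(to_recrawl)
```

Only the pages returned here would need to be fetched again, which is the main saving over a full recrawl.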

Will crawlers always crawl my site?

That is what we strive for at DIT, but it isn't always possible. Typically, any difficulty crawling a site has more to do with the site itself than with the crawler attempting to crawl it. The following issues could cause a crawler to fail:

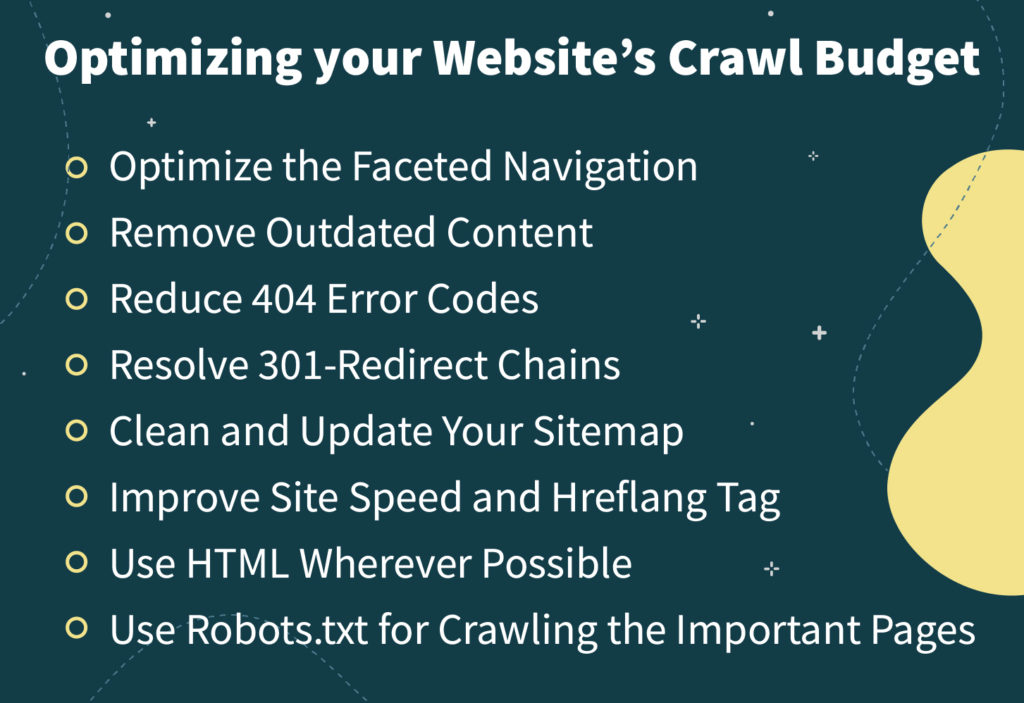

- The site owner denies indexing and/or crawling using a robots.txt file.

- The page itself may indicate that it is not to be indexed and that its links are not to be followed (directives embedded in the page code). These directives are "meta" tags that tell the crawler how it is allowed to interact with the site.

- The site owner has blocked a specific crawler IP address or "user agent".
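The first two checks in the list above can be sketched with Python's standard library. This is a minimal example under stated assumptions: the robots.txt rules, the crawler name `ExampleBot`, and the helper names `allowed_by_robots` and `meta_directives` are all hypothetical, and a production crawler would fetch the real robots.txt from the site before requesting any page.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def allowed_by_robots(robots_txt, user_agent, url):
    """Return True if robots.txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def meta_directives(html):
    """Return the set of robots meta directives found in a page."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'

print(allowed_by_robots(ROBOTS_TXT, "ExampleBot", "https://example.com/blog/"))
print(allowed_by_robots(ROBOTS_TXT, "ExampleBot", "https://example.com/private/notes.html"))
print(meta_directives(page))
```

Note the difference in timing: robots.txt is consulted before a page is requested, while meta directives can only be read after the page has been fetched.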

These methods are usually employed to save bandwidth for the owner of the site or to prevent malicious crawler processes from accessing the content. Some site owners simply don't want their content to be searchable. One might do this, for example, if the site is primarily a personal site and not intended for a general audience.

I think it is important to note here that robots.txt and meta directives are really a "gentlemen's agreement", and there is nothing to prevent a truly impolite crawler from crawling anyway. Well-behaved crawlers are polite: they won't request pages that have been blocked by robots.txt, and they won't index pages or follow links where meta directives forbid it.

How do I optimize my site so it is easy to crawl?

There are steps you can take to build your site so that it is easier for search engines to crawl it and provide better search results. The end result will be more traffic to your site, and your readers will be able to find your content more effectively. For that, there is no better service provider than Desire India Today.

Search Engine Accessibility Tips:

- Have an RSS feed or feeds, so that when you create new content the search software can recognize it and crawl it faster. DIT uses the feeds on your site as an indicator that you have new content available.

- Be selective when blocking crawlers using robots.txt files or meta tag directives in your content. Most blog platforms allow you to customize this feature in some way. A good strategy is to let in the search engines that you trust, and block those you don't.

- Build a consistent document structure. This means that when you construct your HTML pages, the content you want crawled is consistently in the same place, under the same content section.

- Have text content and not just images on a page. Search engines can't find an image unless you provide text or alt attribute descriptions for that image.

- Try (within the limits of your site design) to have links between pages so the crawler can quickly learn that those pages exist. If you're running a blog, you might, for example, have an archive page with links to every post. Most blogging platforms provide such a page. A sitemap page is another way to tell a crawler about lots of pages at once.
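Some of these tips can be checked automatically. As one example, the sketch below scans a page for images that lack a usable alt description, which relates to the tip about images above. The class name `MissingAltFinder`, the helper `images_missing_alt`, and the sample markup are hypothetical, and the check is deliberately simple.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Records the src of every <img> with a missing or empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        alt = attr.get("alt")
        # An empty alt can be intentional for purely decorative images;
        # it is flagged here so a person can review it.
        if alt is None or not alt.strip():
            self.missing.append(attr.get("src") or "(no src)")


def images_missing_alt(html):
    """Return the src values of images that have no alt description."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


# Hypothetical page content, used only for illustration.
sample_page = """
<img src="logo.png" alt="Company logo">
<img src="chart.png">
<img src="spacer.gif" alt="">
"""

print(images_missing_alt(sample_page))
```

Running a check like this across a site's templates is a quick way to find images that a crawler has no text to index.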